The Bot as Research Tool study served as the foundation of my research into how interfaces can support conversational symbiosis among humans and artificial agents in the context of intimate relationships. My goal throughout this study was to better understand how comfortable individuals are with such interfaces, the affordances these interfaces can offer, opportunities for including feedback, and ways such an experience could be integrated into the everyday life of intimate couples.

Kinda Human / Bot as Research Tool

Study Protocol

The Bot as Research Tool guided a participant through a 30-minute user interview/walkthrough that unfolded in two stages.

First Stage

The participant was introduced to a scenario and told to imagine that their partner had made plans for them without asking them about those plans beforehand. They were then told to message back and forth with their partner using a messaging tool, apple, that I prototyped for this study. While messaging, the participant was asked to talk through their interactions (e.g., "What is working? What is not working?").

Second Stage

I asked the participant questions about their responses from the earlier messaging activity. I used QuickTime to record the screen of the messaging tool so that I could analyze the interaction later.

Study Administration

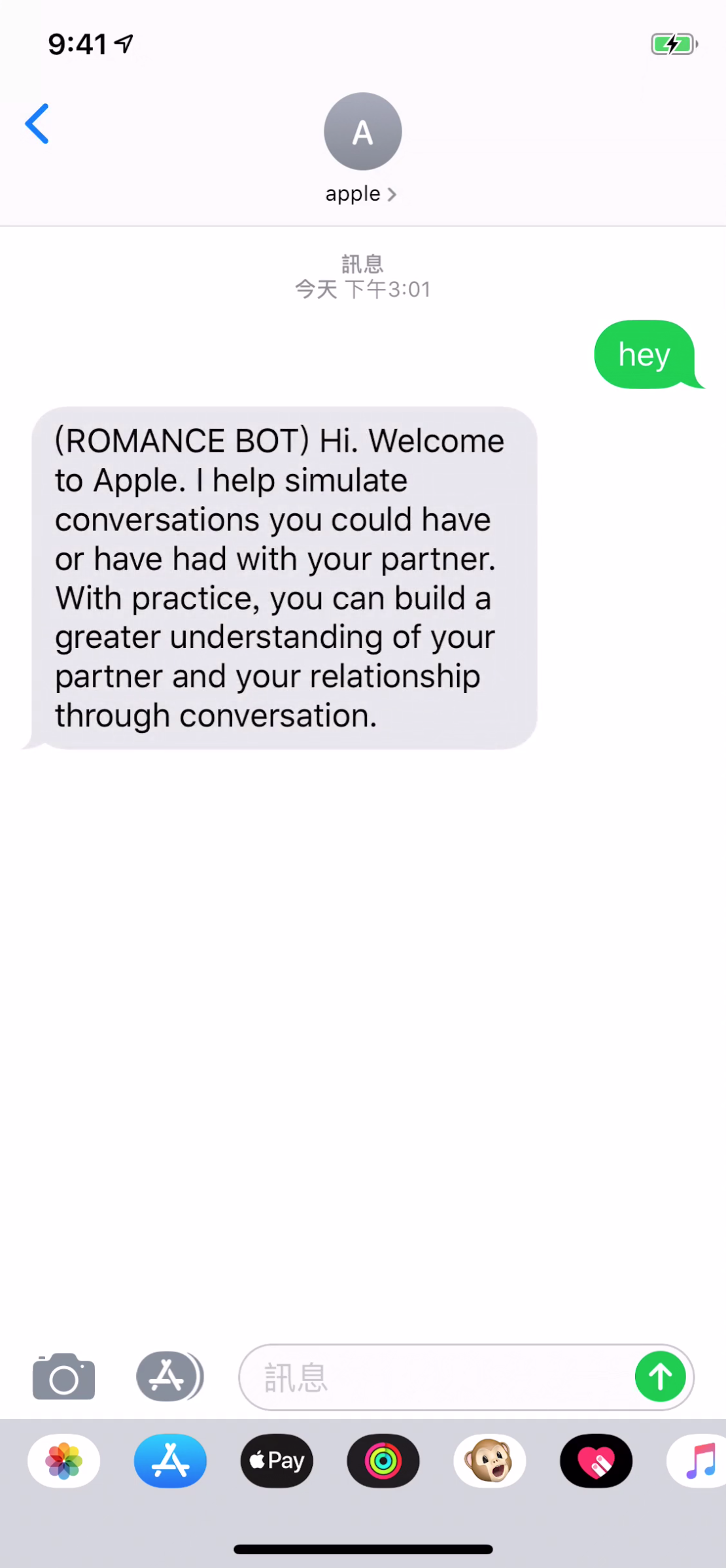

I designed and built apple specifically for this study. apple enables people to simulate conversations they have had or might have with their partner. Through conversations facilitated by artificial agents, a user may build a greater understanding of their partner and relationship than they currently have.

How apple Works

apple was designed for an individual to simulate a conversation based on a topic that could lead to an argument between that individual and their partner. It functioned as an SMS bot via Twilio. Each conversation consisted of four participants: the user, the apple bot that introduces the user to apple and provides help, a simulated partner bot named Chris, and a mediator bot, which is an objective, non-judgmental, accepting, and thoughtful third party. The mediator uses a basic framework for conversation based on Dubberly and Pangaro's "Process of Conversation" and provides relationship advice based on functional and dysfunctional communication patterns. The bot utilizes Dialogflow to understand what users are saying, decide whether they are successfully navigating the "Process of Conversation" (Dubberly & Pangaro, 2009), and select the advice that is most relevant.

Initial Interaction With apple

When users first message apple they are introduced to apple the bot and its capabilities.

Users can then simulate two types of conversations: a conversation with just their simulated partner or a conversation with both their simulated partner and a mediator bot.

Once a user decides on the type of conversation, the user is taken into the simulation and told, "You are now entering an alternative world. Your partner is just about to text you about the event next Saturday."

Once in that world, a user talks to his/her partner. The user and Chris go back and forth for a short amount of time before Chris asks to include the mediator bot. The mediator bot introduces itself and the four stages of conversation (i.e., sharing phase, exchange phase, evolution phase, response phase).
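As a minimal sketch, the four stages the mediator introduces could be tracked with a small state machine. The names `Stage` and `StageTracker` are hypothetical and are not taken from apple's implementation; how apple itself judged that a stage was complete (through Dialogflow) is not reproduced here.

```python
from enum import Enum
from typing import List


class Stage(Enum):
    """The four stages named by the mediator bot, in order."""
    SHARING = 1
    EXCHANGE = 2
    EVOLUTION = 3
    RESPONSE = 4


class StageTracker:
    """Hypothetical helper that records which stage a conversation is in."""

    def __init__(self) -> None:
        # Every conversation is assumed to start in the sharing phase.
        self.current = Stage.SHARING

    def advance(self) -> Stage:
        """Move to the next stage; stay on RESPONSE once it is reached."""
        if self.current is not Stage.RESPONSE:
            self.current = Stage(self.current.value + 1)
        return self.current

    def completed(self) -> List[Stage]:
        """Stages that come before the current one."""
        return [s for s in Stage if s.value < self.current.value]

    def remaining(self) -> List[Stage]:
        """Stages that come after the current one."""
        return [s for s in Stage if s.value > self.current.value]
```

A caller would invoke `advance()` whenever the conversation is judged to have finished a stage; `completed()` and `remaining()` then describe the user's position relative to the whole thread.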

The mediator bot then facilitates a productive conversation between the two parties. It utilizes strategies from intimate relationship literature, which in turn builds credibility for the mediator bot.

The mediator bot introducing itself

The mediator bot providing advice based on functional and dysfunctional communication patterns

By the end of the conversation, a user is intended to be able to reflect on his/her own conversations (i.e., see how strategies mentioned in the chat could be used and where they might have made mistakes with a partner in the past).

The user can then redo the simulation or choose from a number of other simulations.

Study Challenges

There were a number of challenges I confronted when designing and building this bot. Below is an abridged list of such challenges.

- The Timing of the Texts: Ideally, I wanted to replicate the timing of a real conversation, but I struggled to develop a way to do that with Twilio since Twilio does not provide developers with the ability to send texts at specific points in time.

- The Inability to Differentiate Individuals: Ideally, users would be able to easily scan the chat and tell each individual's messages apart, so that possible confusion could be avoided. Again, I was unable to achieve that with Twilio. Twilio does not allow for customization of the phone number that sends the messages. Due to this limitation, I used a technique inspired by screenplays to differentiate roles.

- Visualizing the Stages of a Conversation: Ideally, a user would be able to see their position in a conversation relative to the whole thread, the stages they have completed, and those they have yet to complete. I was unable to visualize the stages of a conversation with the tools and customization options available while I was creating the bot.

Top: Current Technique Inspired by Screenwriting, Bottom: More Optimal Technique
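To illustrate two of the challenges above, here is a minimal sketch of a screenplay-style speaker label and a message-length-based pause. Both function names, the capitalized `SPEAKER: text` layout, and the default typing speed are assumptions made for illustration; the source does not describe the exact format apple used, and this sketch does not send any messages.

```python
def format_line(speaker: str, text: str) -> str:
    """Prefix a message with its speaker in capitals, screenplay style."""
    return f"{speaker.strip().upper()}: {text.strip()}"


def typing_delay(text: str, words_per_minute: float = 40.0,
                 max_seconds: float = 10.0) -> float:
    """Estimate a pause in seconds proportional to message length.

    The 40 words-per-minute default and the 10-second cap are
    illustrative assumptions, not measured values.
    """
    words = len(text.split())
    seconds = words * 60.0 / words_per_minute
    return min(seconds, max_seconds)
```

For example, `format_line("Chris", "Are you free next Saturday?")` returns `"CHRIS: Are you free next Saturday?"`. Because every message arrives from the same phone number, the label is the only cue that separates Chris from the mediator.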

Study Outcomes

The majority of participants who interacted with apple viewed the simulation as relatable; they could imagine themselves in that specific conversation. Other participants saw Chris, the simulated partner, as highly irritable and unrelatable. Regardless, all participants saw that apple provided value by sharing information that an intimate partner might not be aware of and that would be beneficial the next time they communicate with their partner.

Study Synthesis

apple revealed a number of insights that would inform decisions I made throughout the year. These insights included that artificial agents have the potential to provide a place for individual and joint reflection, serve as an outside perspective, guide a conversation, act as a calming presence, be an instrument for detecting sentiment, and home in on specific pieces of language.

apple not only revealed the various areas of an intimate relationship that an artificial agent could benefit, but also that users would willingly employ artificial agents in an intimate context, that they often lack awareness of relationship frameworks, tips, and strategies, and that they could become over-reliant on a tool if they see that tool as a definitive source.

The study also revealed several insights pertaining to frames as organizational principle(s) (Dorst, 2015, p. 63) and as a tool to design agents capable of creating an environment for joint reflection or guiding a conversation. These insights include that a designer would need to employ clear frame(s) that would help users establish realistic expectations of that agent, while also acknowledging that a unique set of frames may be necessary to address different forms and kinds of conversation. At the same time, it is essential to note that the frame(s) employed by an interface could influence a tool's level of intervention, mode of activation, and level of integration (e.g., a tool framed as passive should not intervene every 5 minutes).

- An artificial agent's contextual awareness strongly influences the frame(s) employed by that agent (i.e., successful interfaces rely on an awareness of a situation).

- By aligning the form of an interface and the frame(s) employed by that interface, one could foster a consistent experience.

- When an agent is in the presence of both partners, it would be wise for that agent to take a neutral perspective so that each partner feels equally heard.

- An agent might be more successful intervening in a relationship if the visibility of that agent is dependent on the flow of a conversation (i.e., if an agent is regularly intervening, the impact of those interventions is diminished).

- By making data use visible to all, users would likely understand the boundaries and capabilities of an agent.

Ultimately, apple served as a probe to answer a number of research questions pertaining to my thesis. It also served as an example of an artifact that takes advantage of simulation to enable a user to picture an effective action and enhance their ability to make more effective decisions in the future.